Data scientists are continually on a quest for clean, reliable data, and the work tends to call for data sampling (not every single piece of data can be reviewed and analyzed!). Often, problems in model outcomes and applications can be traced back to the sampling phase.

Indeed, better “best” practices around cleaning, sampling, and overseeing the ML process are emerging every year. Here are a few of the latest ideas for data scientists to reduce sample bias.

Make time for cleaning. Data scientists live and die by “garbage in, garbage out.” But cleaning data takes time. A 2016 CrowdFlower study cited in Forbes found that cleaning and organizing data monopolizes 60 percent of a data scientist’s time.

Writing in Harvard Business Review, Thomas C. Redman made the case that bad data makes machine learning useless. Not only can inaccurate or poorly managed data be the historical source of problems, but using it as the basis for data science compounds those inaccuracies. In most cases, Redman says, these problems can be avoided through the time-intensive work of deep cleaning:

“For training, this means four person-months of cleaning for every person-month building the model, as you must measure quality levels, assess sources, de-duplicate, and clean training data, much as you would for any important analysis. For implementations, it is best to eliminate the root causes of error and so minimize ongoing cleaning.”
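Two of the steps Redman lists, measuring quality levels and de-duplicating, can be sketched in a few lines. This is a minimal illustration, not Redman's procedure: the helper names `quality_report` and `deduplicate`, the field names, and the rule that treats records as duplicates when their key fields match after trimming whitespace and lowercasing are all hypothetical choices.

```python
def quality_report(rows, required_fields):
    """Return the share of rows missing each required field (None or empty string)."""
    n = len(rows)
    report = {}
    for field in required_fields:
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        report[field] = missing / n if n else 0.0
    return report


def deduplicate(rows, key_fields):
    """Keep the first row for each key; keys are compared after trimming and lowercasing."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(str(row.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical records: rows 1 and 2 differ only in case and whitespace.
rows = [
    {"id": "1", "email": "a@x.com", "income": 50000},
    {"id": "2", "email": " A@x.com ", "income": 50000},
    {"id": "3", "email": "", "income": None},
]
print(quality_report(rows, ["email", "income"]))
print(len(deduplicate(rows, ["email"])))  # -> 2
```

Measuring before de-duplicating makes it possible to compare quality levels across sources before any rows are dropped.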

Leverage cleaning tools. There’s an app for that: a lot of data cleaning apps and tools, actually. But Abizer Jafferjee, ML developer at the Citco Group, says data scientists should turn to machine learning tools for the most effective cleaning of data sets:

“[While] rule-based tools and ETL scripts handle each of the data quality issues in isolation…machine-learning solutions for data cleaning consider all types of data quality issues in a holistic way and scale to large datasets.”

Consider smaller data blocks. While no one sets out to create biased AI, Vince Lynch, CEO of IV.AI, says that testing in controlled environments may not surface the discriminatory results that will arise in real life.

Keen oversight and proper modeling can help prevent ethical problems from arising. Lynch recommends using real-world applications to test algorithms: “It’s unwise, for example, to use test groups on algorithms already in production. Instead, run your statistical methods against real data whenever possible.”

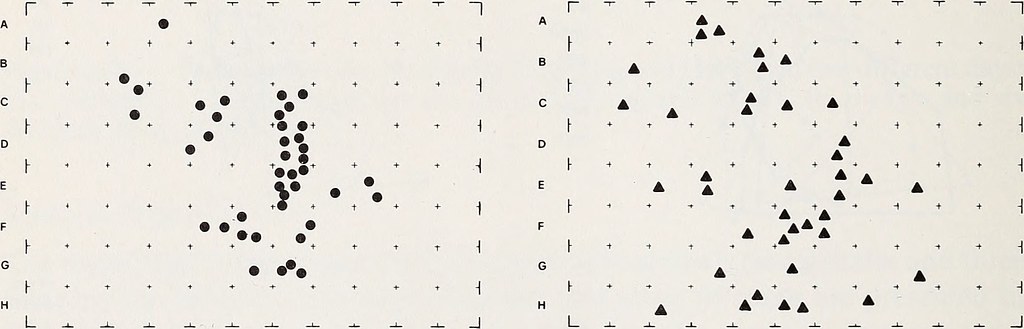

To aid in these “real” tests, a new study examines the feasibility of using smaller blocks of randomly sampled data. Empirically, these samples produce results similar to those of larger data sets.
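The general idea of estimating a statistic from smaller random blocks can be sketched with simple random sampling. This does not reproduce the study's method: the synthetic normally distributed data, the block size of 1,000, the 20 blocks, and the helper name `block_means` are assumptions chosen only for illustration.

```python
import random
import statistics


def block_means(data, block_size, n_blocks, seed=0):
    """Mean of each of n_blocks simple random samples.

    Each block is drawn without replacement; different blocks may share rows.
    """
    rng = random.Random(seed)
    return [statistics.fmean(rng.sample(data, block_size)) for _ in range(n_blocks)]


# Synthetic stand-in for a large dataset (assumption: normal, mean 100, sd 15).
gen = random.Random(42)
population = [gen.gauss(100, 15) for _ in range(100_000)]

full_mean = statistics.fmean(population)
estimates = block_means(population, block_size=1_000, n_blocks=20)
print(f"full data mean: {full_mean:.2f}")
print(f"block means range: {min(estimates):.2f} to {max(estimates):.2f}")
```

Because each block is a random draw, its mean lands close to the full-data mean; a block selected by convenience (for example, the first 1,000 rows of a sorted table) carries no such guarantee, which is where sampling bias creeps in.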

Incorporate counterfactual fairness. To address bias inherited from historical data, Silvia Chiappa of DeepMind developed a method to test a model in “counterfactual” scenarios. The test checks that the model's decision about an individual stays the same in the actual situation and in the opposite, counterfactual one (for example, one in which the person's sensitive attribute, such as gender or race, is different).
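Chiappa's method works with path-specific effects in a full causal graph, which is beyond a short example. As a minimal sketch of the simpler, general counterfactual-fairness check, assume a hypothetical linear causal model in which a binary sensitive attribute A shifts a feature X through X = alpha * A + U, where U is a latent background factor. The check infers U from each record, flips A, recomputes X, and counts how often the prediction changes. `LinearSCM`, `counterfactual_gap`, and the two toy predictors are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LinearSCM:
    """Hypothetical structural causal model: X = alpha * A + U."""
    alpha: float

    def abduct(self, a, x):
        # Abduction: recover the latent background variable U from observed (A, X).
        return x - self.alpha * a

    def counterfactual_x(self, a, x, a_cf):
        # Action + prediction: hold U fixed, set A to a_cf, recompute X.
        u = self.abduct(a, x)
        return self.alpha * a_cf + u


def counterfactual_gap(predict, scm, rows, tol=1e-9):
    """Fraction of rows whose prediction changes when binary A is flipped."""
    changed = 0
    for a, x in rows:
        a_cf = 1 - a
        x_cf = scm.counterfactual_x(a, x, a_cf)
        if abs(predict(a, x) - predict(a_cf, x_cf)) > tol:
            changed += 1
    return changed / len(rows)


scm = LinearSCM(alpha=2.0)
rows = [(0, 1.0), (1, 3.5), (0, -0.5), (1, 2.0)]  # (A, X) pairs


def uses_x(a, x):
    # Depends on X, which is partly caused by A.
    return 0.5 * x


def uses_u(a, x):
    # Depends only on the latent U, so flipping A cannot change it.
    return 0.5 * scm.abduct(a, x)


print(counterfactual_gap(uses_x, scm, rows))  # -> 1.0
print(counterfactual_gap(uses_u, scm, rows))  # -> 0.0
```

Note that simply flipping A while leaving X untouched would pass `uses_x`, because that predictor ignores A directly; propagating the change through the causal model is what exposes the indirect dependence.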

Audit for better results next time. It’s imperative to conduct internal audits of data science projects from start to finish. By reviewing data cleaning, model development, and results interpretation against Google AI’s recommendations (or another framework), teams can identify where bias may have been introduced.

—

Biased outcomes in the real world

Ayodele Odubela, data scientist at SambaSafety, describes the real-world fallout that can follow when sampling bias goes unchecked. “We’re living in unprecedented times,” she says. “Make sure to check for bias in your models by understanding how you sample segments, especially protected classes. If you’re like me and are at a CRA (consumer reporting agency), it’s likely your work can impact who gets a job, who gets approved for a loan, or who can get certain types of housing (or rent/mortgage relief). We need to take even more care not to cause harm by perpetuating bias with our data models.”
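One concrete way to check how segments were sampled is to compare each group's share of the sample with its share of a reference population. This is a minimal sketch: the group labels, the reference shares (which in practice might come from an applicant pool or census figures), the 5-point tolerance, and the helper name `representation_gaps` are all hypothetical.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares, tolerance=0.05):
    """Return {group: observed share - expected share} for groups outside tolerance."""
    n = len(sample_groups)
    if n == 0:
        raise ValueError("sample is empty")
    counts = Counter(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / n
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical sample of 100 records labeled by protected-class segment.
sample = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
reference = {"A": 0.6, "B": 0.3, "C": 0.1}
print(representation_gaps(sample, reference))  # -> {'A': 0.1, 'B': -0.1}
```

A positive gap means a group is over-represented in the sample and a negative gap means it is under-represented; either can skew a model trained on that sample.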

We’re excited to welcome you to the first issue of The Data Standard weekly. The Data Standard is a community of leading data scientists from Merck, General Motors, PayPal, Uber, Google, Chevron, Facebook, and dozens of other great companies.

Each week, we’ll bring you top stories along with our latest episode of The Data Standard Podcast, and tell you about our next live digital event. Scroll down to learn more, and reach out to become a member of The Data Standard community!